By Tom Brant

In the Oregon desert, Facebook has 2,000 iOS and Android phones running tests 24 hours a day.

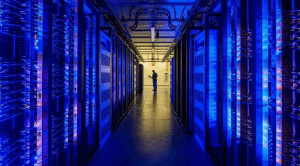

PRINEVILLE, Ore.—There are a lot of ways to make sure you have fast Internet. You could move to a Google Fiber city. You could select one of our Fastest ISPs. Or, you could build your house next to a Facebook data center.

Take the first Facebook data center, for instance. Opened in Prineville, Ore., in 2011, it has served as the model for every data center the social media giant has built since then, from Sweden to Iowa. It consumes so much bandwidth that it requires multiple ISPs serving the area to fortify their networks, bringing local residents along for the ride.

You might think of the Prineville data center, together with Facebook’s other facilities, as the collective heart of the world’s largest social network: they receive a deluge of requests for photos, status updates, and news feed refreshes from the site’s 1.65 billion active users and respond as fast as possible, similar to a heart pumping oxygen into the bloodstream.

The Prineville facility is approaching its fifth anniversary, and it is handling data requests for things that Mark Zuckerberg likely had no idea Facebook would be doing when he dreamed up the idea for the company in his Harvard dorm room.

Prineville still stores photos and status updates, of course, but it also now has thousands of Nvidia GPUs supporting Facebook’s artificial intelligence research, as well as 2,000 iOS and Android phones running tests 24 hours a day. This helps the company’s engineers increase the power efficiency of its apps, which include Instagram, Messenger, and WhatsApp, in addition to Facebook.

Facebook Data Is ‘Resilient, Not Redundant’

The first thing you notice about the Prineville facility is its sheer size. Rising out of the tree-studded high desert of central Oregon, a three-hour drive from Portland, is not a single building but four (and that doesn’t include another huge data center Apple recently built across the street, the aptly named Connect Way). One of the Facebook buildings can fit two Walmarts inside, and is roughly 100 feet longer than a Nimitz-class aircraft carrier.

Inside are servers that hold several petabytes of storage, and which Facebook engineers constantly upgrade by replacing components. Out of the 165 people who work full-time at the data center, 30 per building are assigned just to service or replace power supplies, processors, memory, and other components.

Focusing on “resilience, not redundancy,” as Pratchett puts it, is possible because Facebook has the luxury of storing multiple copies of data in its other locations around the world, from Lulea, Sweden, to Altoona, Iowa. So instead of having two or more of everything, it can focus on strategic equipment purchases at each location, like having one backup power generator for every six main generators.

“You have to be fiscally responsible if you’re going to be resilient,” Pratchett explained. He’s one of the founding fathers of high-tech data centers in Oregon’s high desert. Before he arrived at Facebook, he helped start a data center in The Dalles, Ore., for Google, one of the first Internet companies to capitalize on the cheap hydroelectric power and cool, dry weather that characterize the vast eastern portion of America’s ninth-largest state.

Plentiful power, of course, is crucial to Facebook’s operation. Server temperature is regulated by a combination of water coolant and huge fans pulling in dry desert air (below) instead of power- and money-gobbling air conditioning units. The target temperature range is between 60 and 82 degrees Fahrenheit. Pratchett’s team has become so good at achieving that target that Prineville now boasts a power usage effectiveness (PUE) ratio of 1.07, which means that the cooling equipment, lights, and heaters (anything that’s not a computing device) add just 7 percent on top of the energy the computing equipment itself consumes.
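
The 1.07 ratio lends itself to a quick calculation. The sketch below assumes the standard definition of power usage effectiveness: total facility energy divided by the energy used by computing equipment. Under that assumption, the non-computing overhead equals 7 percent of the computing load, or roughly 6.5 percent of the facility total. The function name `overhead_fractions` is made up for this illustration.

```python
def overhead_fractions(pue: float) -> tuple[float, float]:
    """Return (overhead / computing energy, overhead / total energy).

    Assumes PUE = total facility energy / computing-equipment energy.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    overhead_vs_it = pue - 1.0             # overhead relative to computing load
    overhead_vs_total = (pue - 1.0) / pue  # overhead relative to facility total
    return overhead_vs_it, overhead_vs_total


vs_it, vs_total = overhead_fractions(1.07)
print(f"{vs_it:.1%} of computing energy, {vs_total:.1%} of total energy")
```

For comparison, a facility with a ratio of 2.0 would spend as much energy on overhead as on computing.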

32 iPhones in a Box

Most of the cooling and power goes towards meeting the demands of servers, but some is diverted to a comparatively small app-testing room with implications for reducing power use not just in Prineville, but on every phone with a Facebook app.

It’s no secret that the Facebook app has struggled with power management. The Guardian claimed earlier this year that the app was decreasing battery life on iPhones by 15 percent. To streamline the app and decrease its battery consumption, the company brought approximately 2,000 iOS and Android phones to Prineville, where they are installed inside boxes in groups of 32.

Each box has its own Wi-Fi network, hemmed in by insulated walls and copper tape, that engineers back at headquarters in Silicon Valley use to deliver a battery of tests. They’re looking for “regressions” in battery use and performance caused by updates to the Facebook, Instagram, Messenger, and WhatsApp apps. If they find one in Facebook’s own code, they fix it. If the inefficiency was introduced by a developer, their system automatically notifies the offender via email.
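
What checking for a “regression” might look like can be sketched in a few lines of Python. This is an illustration only, not Facebook’s tooling: the function name `find_regression`, the 5 percent tolerance, and the sample power readings are all assumptions invented for the example. The idea is simply to compare the average power draw of a new build against a known baseline and flag the build if the increase exceeds the tolerance.

```python
from statistics import mean


def find_regression(baseline_mw, candidate_mw, tolerance=0.05):
    """Return the relative increase in mean power draw if it exceeds
    `tolerance`; otherwise return None. All inputs are hypothetical."""
    base = mean(baseline_mw)
    cand = mean(candidate_mw)
    increase = (cand - base) / base
    return increase if increase > tolerance else None


# Made-up power readings in milliwatts for the same test on two app builds.
baseline = [410.0, 395.0, 402.0, 398.0]
candidate = [455.0, 448.0, 461.0, 450.0]

result = find_regression(baseline, candidate)
if result is not None:
    print(f"Possible regression: power draw up {result:.1%}")
else:
    print("No regression detected")
```

A real system would need many more runs per device and some statistical test to separate true regressions from noise, which is presumably part of why the phones run around the clock.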

The boxes don’t just contain the latest iPhone 6s or Galaxy S7. On the iOS side, they include many models from iPhone 4s and later. On the Android side, who knows? Suffice it to say there’s one guy whose job it is to find obscure Android devices and bring them to Prineville.

“We have to source phones from everywhere,” said Antoine Reversat, an engineer who helped develop the testing infrastructure and software. His ultimate goal is to double the racks’ capacity to hold up to 64 phones each, and figure out a way to reduce the 20-step manual procedure required to configure a phone for testing to one step. That should comfort anyone who’s ever had their phone die while refreshing their news feed.

Big Sur: Facebook’s AI brain

Next up on our tour were the Big Sur artificial intelligence servers, which, like the California coastline for which they are named, arguably make up the most scenic part of the data center. We’re not talking sweeping Pacific vistas, of course, but instead a computer screen that displayed what appeared to be a group of paintings, manned by Ian Buck, Nvidia’s vice president of accelerated computing.

“We trained a neural network to recognize paintings,” Buck explained. “After about a half-hour of looking at the paintings, it’s able to start generating its own.”

The ones on display resembled 19th-century French impressionism, but with a twist. If you want a combination of a fruit still life and a river landscape, Big Sur will paint it for you by flexing its 40 petaflops of processing power per server. It’s one of several AI systems that use Nvidia GPUs (in this case, PCIe-based Tesla M40 processors) to solve complex machine-learning problems. Google’s DeepMind healthcare project also uses Nvidia’s processors.

Facebook is using Big Sur to read stories, identify faces in photos, answer questions about scenes, play games, and learn routines, among other tasks. It’s also open-sourcing the hardware design, offering other developers a blueprint for how to set up their own AI-specific infrastructure. The hardware includes eight Tesla M40 GPU boards of up to 300 watts each. All of the components are easily accessible within a server drawer to make upgrades easier, and those are sure to come as Nvidia releases new PCIe versions of its Tesla GPUs, including the flagship P100.

Cold Storage for Forgotten Photos

Since Prineville is Facebook’s first data center, it tends to be where engineers test out ideas to make Facebook’s servers more efficient. One of the latest ideas is cold storage, or dedicated servers that store anything on Facebook that doesn’t get a lot of views—your high school prom photos, for instance, or those dozens of status updates you posted when Michael Jackson died in 2009.

Cold storage’s secret to success is its multiple tiers. The first level is made up of high-performance enterprise hardware, while lower tiers use cheaper components, and in some cases—perhaps for those photos of you with braces that you hope no one ever sees—backup tape libraries. The end result is that each cold storage server rack can hold 2 petabytes of storage, or the equivalent of 4,000 stacks of pennies that reach all the way to the moon.

Building for the Future

Although Facebook has brought faster Internet and other benefits to Prineville, including more than $1 million in donations to local schools and charities, its presence is not without controversy. Oregon is giving up a lot of money in tax breaks to the company at a time when the local economy, still reeling from the 2008 housing bubble, trails the national average.

Still, 1.65 billion people would not have access to Facebook without this desert outpost and its sister data centers. And even as it puts the finishing touches on its fourth Prineville building, the company is well underway towards completing a fifth center in Texas. But if that makes you feel guilty about the energy your Facebook browsing consumes, it shouldn’t. According to Pratchett, a single user browsing Facebook for an entire year consumes less energy than is required to make a latte. In other words, you can forgo just one trip to Starbucks and scroll through your news feed to your heart’s content.