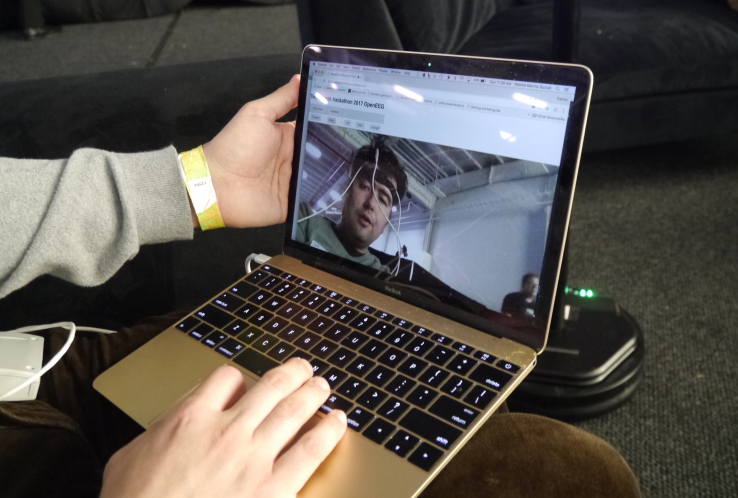

Hardware is hard at the best of times. But software developer Patrick Morris-Suzuki set himself the ambitious challenge of creating a blink-detecting system for hands-free typing during an overnight hackathon here at TechCrunch Disrupt New York 2017.

The hack was inspired by Facebook’s demo at F8 last month, when the company revealed it’s working on building a brain-computer interface.

Clearly a hackathon is not the place for playing around with brain implants. Instead, Morris-Suzuki decided to build a low-cost wearable to pick up a signal he thought should be relatively easy to track: blinking.

As it turned out, it was still challenging — and he had trouble demoing the blink detection onstage. “The blink detection is simple thresholding, which works when it works!” he said in a backstage interview with TechCrunch.
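To give a sense of what "simple thresholding" can mean in practice, here is a minimal Python sketch. It is not Morris-Suzuki's code: the function name, the threshold value and the refractory window (a stretch of samples ignored after each detection so one blink is not counted twice) are all assumptions made for illustration.

```python
from typing import List, Sequence


def detect_blinks(
    samples: Sequence[float],
    threshold: float,
    refractory: int = 50,
) -> List[int]:
    """Return the sample indices where a blink is assumed to start.

    A blink is flagged when the absolute signal value first rises to or
    above `threshold`. After each detection, crossings within the next
    `refractory` samples are ignored (a hypothetical debounce window).
    """
    blinks: List[int] = []
    last = -refractory - 1  # index of the most recent detection
    above = False           # was the previous sample over the threshold?
    for i, value in enumerate(samples):
        crossed = abs(value) >= threshold
        # Only a fresh upward crossing outside the refractory window counts.
        if crossed and not above and i - last > refractory:
            blinks.append(i)
            last = i
        above = crossed
    return blinks
```

A fixed threshold like this is cheap to compute, but it will misfire whenever noise or a drifting baseline pushes the signal past the cut-off, which fits the "works when it works" description.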

The hack uses a $100 EEG-SMT, an open electroencephalogram (EEG) device made by Olimex, plus a couple of electrodes that are wired to it and clipped to the front of a headband.

The core idea is a headset that could help disabled people with limited manual mobility to type or interact with other systems just by blinking, with blinks serving as the input signal that moves and activates an on-screen selector.
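One common way to drive an interface from a single on/off signal is scanning: a highlight steps through the options on a timer, and the user's one signal selects whatever is highlighted. The Python sketch below shows that scheme under stated assumptions. The article does not say exactly how blinks move and activate the selector in this hack, so the `ScanningSelector` class and its `tick` and `blink` methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ScanningSelector:
    """Single-signal scanning selector (illustrative, not the hack's code)."""

    keys: List[str]
    index: int = 0
    typed: List[str] = field(default_factory=list)

    def tick(self) -> str:
        """Advance the highlight to the next key, wrapping at the end."""
        self.index = (self.index + 1) % len(self.keys)
        return self.keys[self.index]

    def blink(self) -> str:
        """Select the highlighted key and restart scanning from the first key."""
        key = self.keys[self.index]
        self.typed.append(key)
        self.index = 0
        return key
```

In a real system `tick` would be called by a timer and `blink` by the blink detector, so the scan speed trades typing rate against the risk of selecting the wrong key.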

For the typing use case, a predictive text system reduces the number of blinks needed to spell out what you want to say.

“If you take people for example with ALS, so if you take Stephen Hawking… he actually uses something mounted to his cheeks because he can still use his cheeks. He used to use something that he would tap with his finger but he can’t do it anymore,” says Morris-Suzuki.

The digital keyboard he built for the system has just 12 keys presented in a basic HTML layout. These can be used to send messages via a two-way chat powered by PubNub.

For the predictive functionality he says he used an open source random text generator that trains character-level recurrent neural networks, adapted to “get the probabilities out, so that you can try to block things by probabilities,” as he puts it.

“It was based on that and I just extracted the probabilities to try to recursively build up the tree structure of the keyboard,” he explains.
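As a rough illustration of building a keyboard tree from character probabilities, the Python sketch below gives the most likely characters their own keys and folds the rest into a nested "more" key, recursively. This is one simple scheme and not necessarily the one Morris-Suzuki used. The function names are hypothetical, and in the hack the probabilities would come from the character model rather than a hand-written dictionary.

```python
from typing import Dict, List, Union

# A tree is a list of keys; each key is a character or a nested sub-tree.
Tree = List[Union[str, "Tree"]]


def build_key_tree(probs: Dict[str, float], num_keys: int = 12) -> Tree:
    """Lay out symbols on `num_keys` keys, most probable first."""
    if num_keys < 2:
        raise ValueError("need at least two keys to nest a sub-tree")
    # Sort by descending probability; break ties alphabetically.
    ranked = sorted(probs, key=lambda s: (-probs[s], s))
    return _split(ranked, num_keys)


def _split(ranked: List[str], num_keys: int) -> Tree:
    if len(ranked) <= num_keys:
        return list(ranked)
    # The top symbols get direct keys; the last key opens the remainder.
    head = ranked[: num_keys - 1]
    return [*head, _split(ranked[num_keys - 1:], num_keys)]


def selections_needed(tree: Tree, symbol: str, depth: int = 1) -> int:
    """Count key selections to reach `symbol`; 0 means it is not in the tree."""
    for node in tree:
        if node == symbol:
            return depth
        if isinstance(node, list):
            found = selections_needed(node, symbol, depth + 1)
            if found:
                return found
    return 0
```

With a layout like this, likely characters cost a single selection while rare ones cost more, which is how prediction can cut the average number of blinks per character.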

He also included the ability to “blink-drive” a telepresence robot via a set of back/forward/left/right navigation buttons. For this, he says he used the Firebase API.

However, as noted above, the signals pulled from the EEG device turned out to be pretty noisy, and he was unable to get the system working properly during his one-minute demo. He was, though, able to demo typing on the keyboard via blinks during a backstage chat.

“I kind of hoped that the blinks would be more reliable,” he says. “They were being more reliable [earlier]. It’s the most reliable signal in general. Because it’s actually not really brain, it’s more like muscles.

“If you read the research, there’s lots of research on these techniques — this thing called SSEP — and they claim like 90 percent accuracy. I don’t know how that’s possible… I think part of it has to do with very, very controlled conditions, and very expensive equipment.

“They’re obviously not putting people up on stage when they’re trying it. They’re also using professional EEG equipment which I think starts around $8,000. Which is a lot,” he adds.