This story began when I was giving a TV interview about a recent incident where police in the U.S. obtained a search warrant for an Amazon Echo owned by a murder suspect in the hopes that it would help convict him — or at least shed light on the case (the case is not relevant to our story, but here’s an article about it, anyway).

The Amazon Echo, a smart helper running Alexa (I know I’m going to make some of my friends at Amazon angry by writing it, but Alexa is Amazon’s Siri), is a very cool device that can do anything from playing music to ordering things from Amazon. To activate it, you just have to say “Alexa” and then ask for whatever it is that you want: “Alexa, please wake me up tomorrow at 5 am.”

The day after my interview, several people told me that every time I said “Alexa” on TV (and, as you can imagine, I said it several times during that interview), their device turned on and entered a waiting-for-your-command mode.

It took me a few seconds to grasp the meaning of what they had just told me… and then I had one of those OMG moments. This is exactly the kind of thing I love to hear. Immediately I started planning what I would say to mess with Echo owners who were unlucky enough to be watching the next time I was on TV. Actually, I don’t even need them to watch — I just need them to leave the TV on.

Finding the best way to mess with Echo owners

In the process of learning more about Alexa’s capabilities, I found an extensive list of commands that work with Alexa; I also consulted several Echo owners.

My initial idea was to make Echo play the scariest and noisiest heavy metal song I could find (“Alexa, please play Behemoth Slaves Shall Serve!”). Then I thought — maybe I could have Echo order something interesting from Amazon: “Alexa, please buy a long white wedding dress.”

This would definitely create some interesting situations.

Then I thought, maybe I could make Alexa run dubious internet searches that might cause the police to knock on Echo owners’ doors:

“Alexa, what do you do when your neighbor just bought a set of drums?”

“Alexa, where can I get ear plugs urgently?”

“Alexa, how many pills would kill a person?”

“Alexa, how do you hide a body?”

As I started getting more into the Echo command possibilities, I realized there is an interesting attack vector here — and it is bigger than messing with people over the TV.

Echo, personal data and much more

Many use Echo to add items to their to-do list, their schedule, their shopping list, etc. Advanced users also can install plug-ins that connect Alexa to other resources, such as their Google Drive and Gmail. As expected, Echo also can retrieve these items and read them out loud.

And because Echo has no user identification process, this actually means that anyone within talking range can get access to Echo users’ sensitive emails, documents and other personal data.

The world of IoT was destined to introduce problems of a new type. And here they are.

And it goes on. Alexa has a kit that allows it to control smart houses. There are alarm systems that you can turn on and off with voice commands and, in some cases, even unlock the front door.

The fact that Alexa doesn’t identify users means that for “her,” all of us humans are the same. Actually, you don’t even need a real person to speak. In an experiment we ran, we controlled Alexa with a text-to-speech system, and it worked wonderfully.

Why Alexa?

Though other companies have introduced personal helpers, Alexa is unique in the sense that it is open and has many interfaces and plug-ins. Even Hyundai integrates Alexa in some of its cars, which means you can control the car’s locks, climate, lights and horn, and even start it, with voice commands.

The death of secure physical boundaries

But there is a much greater issue here. And it relates to the fact that placing an Echo device in a physically secured environment does not actually mean that threat actors cannot access it.

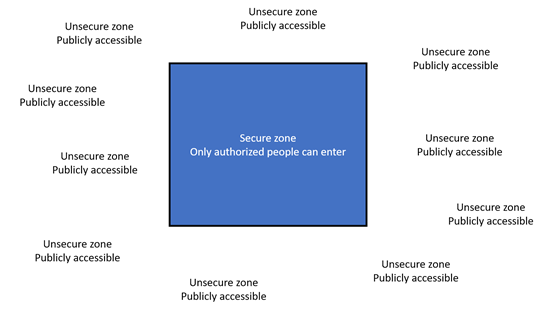

Until now, we have (generally) divided the world into two sections: secured areas and unsecured areas. And we had straightforward means to facilitate the separation between the two: walls, barbed-wire fences, gates, guards, cameras, alarm systems, etc.

But now, devices like Alexa force us to define a new type of physical perimeter: the “vocal” perimeter. This perimeter has different borders that are much harder to define and measure because they are not fixed. They are derived from the power of your sound source. For example, for a human with no additional voice amplification tools, the vocal perimeter might look like this:

But if you have a truck with huge speakers on it and a microphone, you might be able to drive down the street and operate all the Alexa devices around:
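For a rough sense of scale, here is a back-of-the-envelope sketch of how the vocal perimeter grows with the power of the sound source. It assumes an idealized open space where sound level drops by 20·log10(distance) decibels relative to its level at 1 meter, and it ignores walls, windows and background noise entirely. The function name, the 40 dB pickup level and both source levels are illustrative assumptions, not measurements of any Echo device.

```python
def vocal_perimeter_radius_m(source_db_at_1m: float, min_audible_db: float) -> float:
    """Distance in meters at which the sound level falls to min_audible_db.

    Uses the free-field inverse-square law: the level drops by
    20 * log10(r) dB relative to the level measured at 1 meter.
    """
    return 10 ** ((source_db_at_1m - min_audible_db) / 20)


# Illustrative numbers only (assumptions, not measurements).
MIN_AUDIBLE_DB = 40.0  # assumed level the device needs to pick up a command

human_voice = vocal_perimeter_radius_m(60.0, MIN_AUDIBLE_DB)
loudspeaker = vocal_perimeter_radius_m(100.0, MIN_AUDIBLE_DB)

print(f"human voice: ~{human_voice:.0f} m, loudspeaker: ~{loudspeaker:.0f} m")
```

Under these assumptions, every extra 20 dB of source power multiplies the radius by ten, which is why the perimeter is so hard to pin down: it depends on the attacker’s equipment, not on your walls.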

Vocal perimeter security solutions?

Wi-Fi networks suffer from a similar problem. Companies that wanted to implement Wi-Fi networks had to deal with the fact that they might be accessible from outside their physical office. But with Wi-Fi, we had a simple solution — encryption.

This is obviously not relevant to voice-operated devices. You cannot encrypt the conversation between the user and the device. Or can you? No, you can’t.

Nevertheless, an obvious solution to this problem is to integrate a biometric vocal identification system in Alexa. With that in place, Alexa could just ignore you if you are not an authorized user. But this is not an easy thing to accomplish. Even though the world of biometric vocal identification constantly innovates and improves, there are still challenges, and vocal identification solutions still struggle with a poor trade-off between false positives and false negatives.
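To see why the trade-off between false positives and false negatives is awkward, consider a minimal sketch of a voice check that accepts a speaker when a similarity score passes a threshold. Everything here is hypothetical: the `error_rates` helper, the score lists and the thresholds are made up for illustration and do not describe how Alexa or any real voice-identification product works.

```python
def error_rates(owner_scores, impostor_scores, threshold):
    """Return (false_reject_rate, false_accept_rate) for a given threshold.

    A speaker is accepted when the similarity score is >= threshold.
    """
    false_rejects = sum(s < threshold for s in owner_scores)
    false_accepts = sum(s >= threshold for s in impostor_scores)
    return false_rejects / len(owner_scores), false_accepts / len(impostor_scores)


# Made-up similarity scores in [0, 1]; not real data.
owner_scores = [0.91, 0.84, 0.77, 0.69, 0.58]     # the real owner speaking
impostor_scores = [0.72, 0.61, 0.45, 0.33, 0.20]  # someone else speaking

for threshold in (0.5, 0.65, 0.8):
    frr, far = error_rates(owner_scores, impostor_scores, threshold)
    print(f"threshold={threshold:.2f}  owner rejected={frr:.0%}  stranger accepted={far:.0%}")
```

With these invented numbers, a low threshold never rejects the owner but lets strangers through, while a high threshold blocks every stranger and ignores the owner most of the time. No single threshold gets both error rates to zero, and that is the ratio problem in a nutshell.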

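And then there is Adobe’s VoCo. This amazing application can actually synthesize a specific person’s voice from recorded samples (watch the demo video), which means that even a working vocal identification system could be fooled by a convincing imitation of an authorized user.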

So there is nothing that can be done?

There is always an inherent trade-off between security and user experience. You can set up Alexa to only operate when you press a button, but that makes no sense.

We could implement a password request for any command, but this is stupid — you cannot say the password out loud; obviously it is not going to work:

Me: “Alexa, 23Dd%%2ew, what’s the time?”

Alexa: “23Dd%%2ew is the wrong password”

Me: “Arrrrr I meant capital W”

Alexa: “ArrrrrImeantcapitalW is the wrong password”

At least you can change Alexa’s wake word, but this will only partially mitigate the problem. (By the way, for the Trekkies among us, Amazon has recently added the word “Computer” as a wake word. Yes. You’re welcome.)

Well, at least Echo doesn’t have a camera integrated into it. Yet.

Bottom line, Amazon and other manufacturers of voice-controlled devices will have to integrate vocal identification in the years to come.

Additional thoughts…

Should we ban Hollywood from producing movies that have a character going by the name “Alexa”? What if Alexa the character is watching her boyfriend in trouble, and with his last breath he yells “Alexa, call 911!”?

Funnily enough, after I started working on this article, a TV anchor accidentally said “Alexa, order me a dollhouse.” And it did. For many people.

Call for action: If you are an entrepreneur, build a startup company with a product that solves this issue (vocal firewall?). Sell it to Amazon. Make billions.

Thanks to all the Amazon Echo users who helped in the process, and specifically to Uri Shamay.

Bonus links

If you are unconvinced that vocal identification is a complicated thing, you can watch this video. Or this one (warning: explicit language!).

And how can we end without XKCD: https://xkcd.com/1807/