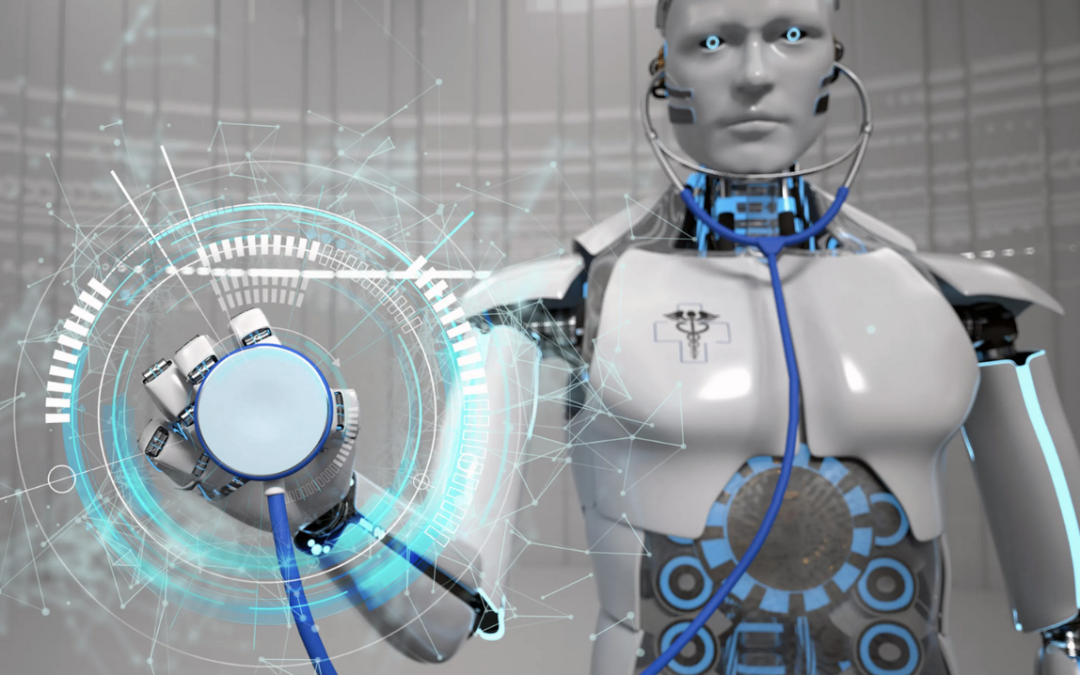

These days, ChatGPT is a hot topic of conversation, with some embracing the ever-developing AI-driven technology and others urging caution about how and where it should be used. New research has found that, compared to human doctors, ChatGPT gave more empathetic and higher-quality responses to real-world health questions, raising the question: are doctors replaceable?

Virtual healthcare took off at the height of the COVID-19 pandemic as a way of providing people with access to medical professionals while they were in isolation or lockdown.

But the rise of virtual healthcare during the pandemic put additional strain on doctors, who saw a 1.6-fold increase in electronic patient messages, with each message adding more than two minutes of work in the patient’s electronic record and additional after-hours work. The increased workload led to 62% of US doctors reporting symptoms of burnout – a record high – and increased the likelihood that patients’ messages would go unanswered.

This prompted researchers from the University of California (UC) San Diego to consider the role of AI, particularly ChatGPT, in medicine. They wanted to know whether ChatGPT could respond accurately to the types of questions patients sent to their doctors.

“ChatGPT might be able to pass a medical licensing exam,” said co-author Dr Davey Smith, physician and professor at the UC San Diego School of Medicine, “but directly answering patient questions accurately and empathetically is a different ballgame.”

To obtain a large, diverse sample group, the research team turned to Reddit’s AskDocs subreddit (r/AskDocs), which has more than 480,000 members who post medical questions that licensed, verified healthcare professionals answer.

The researchers randomly sampled 195 unique exchanges from r/AskDocs, fed the original question into ChatGPT (version 3.5) and asked it to generate a response. Three licensed healthcare professionals assessed each question and the corresponding responses, not knowing which came from a doctor and which from ChatGPT.

The evaluators were first asked which response was “better”. Then they had to rate the quality of the information provided (very poor, poor, acceptable, good, or very good) and the empathy or bedside manner provided (not empathetic, slightly empathetic, moderately empathetic, empathetic, very empathetic). The evaluators preferred the ChatGPT responses 79% of the time.

“ChatGPT messages responded with nuanced and accurate information that often addressed more aspects of the patient’s questions than physician responses,” said co-author Jessica Kelley.

ChatGPT was also found to provide higher-quality responses. The proportion of responses rated ‘good’ or ‘very good’ was 3.6 times higher for ChatGPT than for doctors (78.5% versus 22.1%). The AI was also rated as more empathetic: the proportion of responses rated ‘empathetic’ or ‘very empathetic’ was 9.8 times higher for ChatGPT than for doctors (45.1% versus 4.6%).
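For readers who want to check the arithmetic, the "times higher" figures are simply the ratio of the two reported percentages. The short Python sketch below reproduces them from the numbers quoted above; the function name `prevalence_ratio` is an illustrative choice, not something taken from the study.

```python
def prevalence_ratio(pct_a: float, pct_b: float) -> float:
    """Return how many times larger pct_a is than pct_b."""
    if pct_b == 0:
        raise ValueError("pct_b must be non-zero to form a ratio")
    return pct_a / pct_b


# Percentages as reported in the article (ChatGPT first, doctors second).
quality_ratio = prevalence_ratio(78.5, 22.1)  # rated 'good' or 'very good'
empathy_ratio = prevalence_ratio(45.1, 4.6)   # rated 'empathetic' or 'very empathetic'

print(f"Quality: {quality_ratio:.1f} times higher")  # Quality: 3.6 times higher
print(f"Empathy: {empathy_ratio:.1f} times higher")  # Empathy: 9.8 times higher
```

Note that these are ratios of proportions, not differences: the gap in quality ratings is about 56 percentage points, while the gap in empathy ratings is about 40.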

This doesn’t mean doctors are dispensable, say the researchers; rather, doctors should embrace ChatGPT as a learning tool to improve their practice.

“While our study pitted ChatGPT against physicians, the ultimate solution isn’t throwing your doctor out altogether,” said study co-author Adam Poliak. “Instead, a physician harnessing ChatGPT is the answer for better and empathetic care.”

The researchers say investing in ChatGPT and other AI would positively impact patient health and doctor performance.

“We could use these technologies to train doctors in patient-centered communication, eliminate health disparities suffered by minority populations who often seek healthcare via messaging, build new medical safety systems, and assist doctors by delivering higher quality and more efficient care,” said co-author Mark Dredze.

As a result of the study’s findings, the researchers are looking at undertaking randomized controlled trials to judge how using AI in medicine affects outcomes for both doctors and patients.